Technology reporter

Getty Images

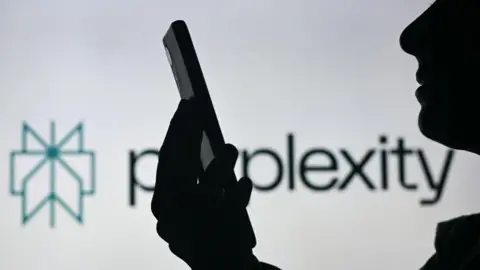

The BBC is threatening to take legal action against an artificial intelligence (AI) firm whose chatbot the corporation says is reproducing BBC content “verbatim” without its permission.

The BBC has written to Perplexity, which is based in the US, demanding it immediately stops using BBC content, deletes any it holds, and proposes financial compensation for the material it has already used.

It is the first time that the BBC – one of the world’s largest news organisations – has taken such action against an AI company.

In a statement, Perplexity said: “The BBC’s claims are just one more part of the overwhelming evidence that the BBC will do anything to preserve Google’s illegal monopoly.”

It did not explain what it believed the relevance of Google was to the BBC’s position, or offer any further comment.

The BBC’s legal threat has been made in a letter to Perplexity’s boss Aravind Srinivas.

“This constitutes copyright infringement in the UK and breach of the BBC’s terms of use,” the letter says.

The BBC also cited its research published earlier this year that found four popular AI chatbots – including Perplexity AI – were inaccurately summarising news stories, including some BBC content.

Pointing to findings of significant issues with representation of BBC content in some Perplexity AI responses analysed, it said such output fell short of BBC Editorial Guidelines around the provision of impartial and accurate news.

“It is therefore highly damaging to the BBC, injuring the BBC’s reputation with audiences – including UK licence fee payers who fund the BBC – and undermining their trust in the BBC,” it added.

Web scraping scrutiny

Chatbots and image generators that can generate content in response to simple text or voice prompts in seconds have swelled in popularity since OpenAI launched ChatGPT in late 2022.

But their rapid growth and improving capabilities have prompted questions about their use of existing material without permission.

Much of the material used to develop generative AI models has been pulled from a vast range of web sources using bots and crawlers, which automatically extract site data.

The rise in this activity, known as web scraping, recently prompted British media publishers to join calls by creatives for the UK government to uphold protections around copyrighted content.

In response to the BBC’s letter, the Professional Publishers Association (PPA) – which represents over 300 media brands – said it was “deeply concerned that AI platforms are currently failing to uphold UK copyright law.”

It said bots were being used to “illegally scrape publishers’ content to train their models without permission or payment.”

It added: “This practice directly threatens the UK’s £4.4 billion publishing industry and the 55,000 people it employs.”

Many organisations, including the BBC, use a file called “robots.txt” in their website code to try to block bots and automated tools from extracting data en masse for AI.

It instructs bots and web crawlers not to access certain pages and material, where present.

But compliance with the directive remains voluntary and, according to some reports, bots do not always respect it.
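As a rough illustration of how a well-behaved crawler might consult robots.txt before fetching a page, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt contents, the crawler name `ExampleAIBot`, the helper `is_allowed` and the `example.com` URLs are all hypothetical and are not taken from the BBC's actual file or from any real crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one named crawler is blocked from the whole site,
# every other crawler is blocked only from /private/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("ExampleAIBot", "OtherBot"):
        for url in ("https://example.com/news/story",
                    "https://example.com/private/page"):
            print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

The check runs entirely on the crawler's side. Nothing in the file stops a bot that skips this step from requesting the page anyway, which is why compliance is described as voluntary.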

The BBC said in its letter that while it disallowed two of Perplexity’s crawlers, the company “is clearly not respecting robots.txt”.

Mr Srinivas denied accusations that its crawlers ignored robots.txt instructions in an interview with Fast Company last June.

Perplexity also says that because it does not build foundation models, it does not use website content for AI model pre-training.

‘Answer engine’

The company’s AI chatbot has become a popular destination for people looking for answers to common or complex questions, describing itself as an “answer engine”.

It says on its website that it does this by “searching the web, identifying trusted sources and synthesising information into clear, up-to-date responses”.

It also advises users to double-check responses for accuracy – a common caveat accompanying AI chatbots, which have been known to state false information in a matter-of-fact, convincing way.

In January Apple suspended an AI feature that generated false headlines for BBC News app notifications when summarising groups of them for iPhone users, following BBC complaints.